Machine Learning for Physics and Astronomy

Christoph Weniger

Thursday, 3 March 2022

Chapter 2: The Multi Layer Perceptron

\(\newcommand{\indep}{\perp\!\!\!\perp}\)

Based on the slide decks of Gilles Louppe.

A story of three activation functions

In this lecture I will discuss the role of various activation functions. We will construct a dense deep neural networks, also known as multi layer percetrons.

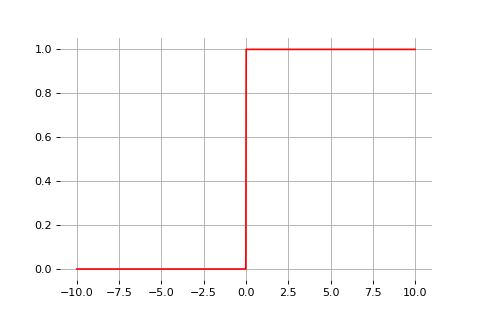

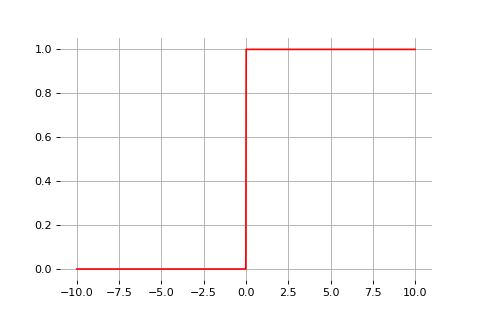

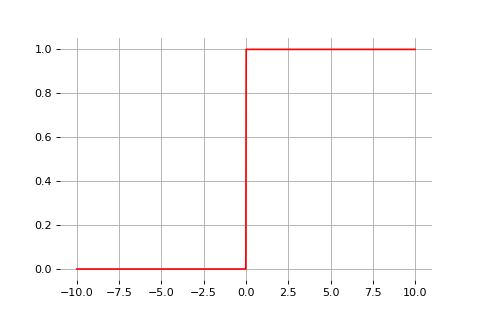

Sign

Sign

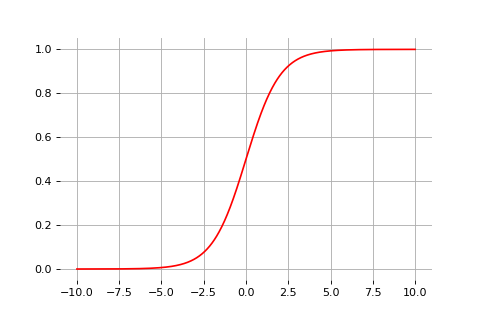

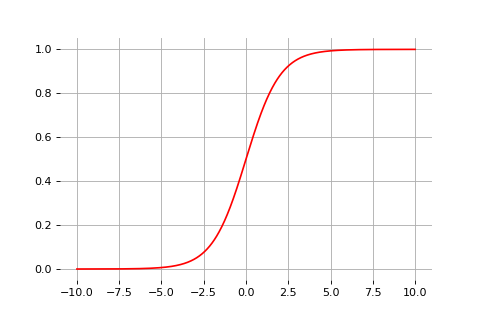

Sigmoid

Sigmoid

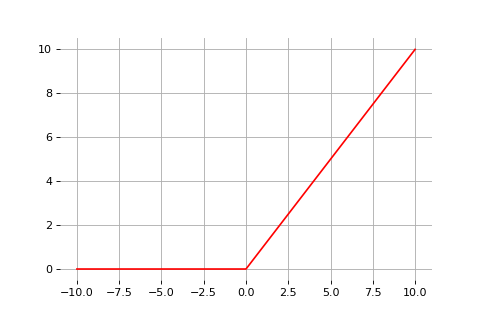

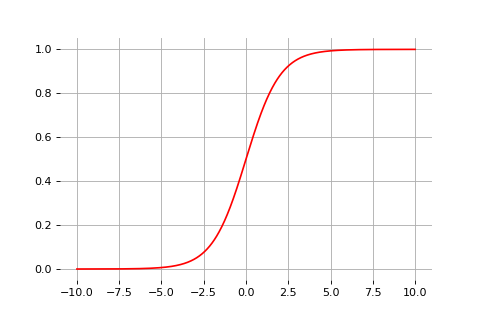

ReLU

ReLU

1) The sign activation function

Perceptron

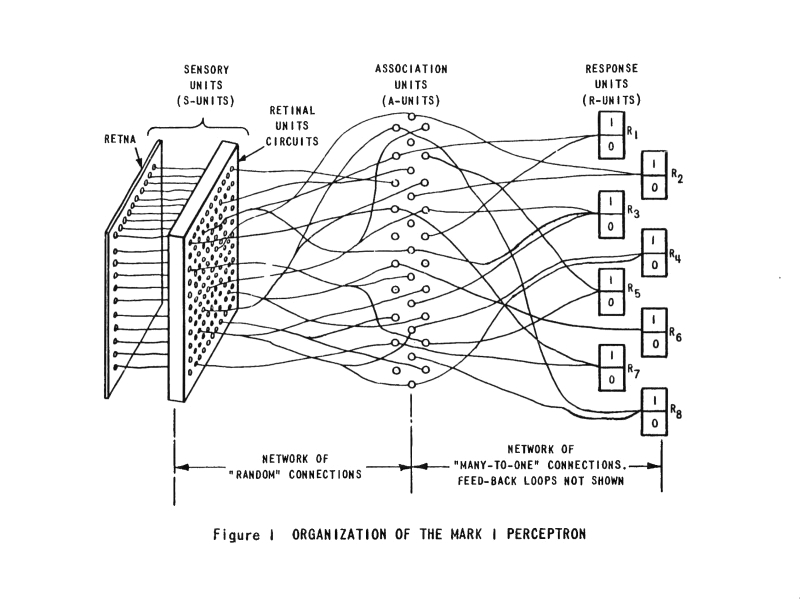

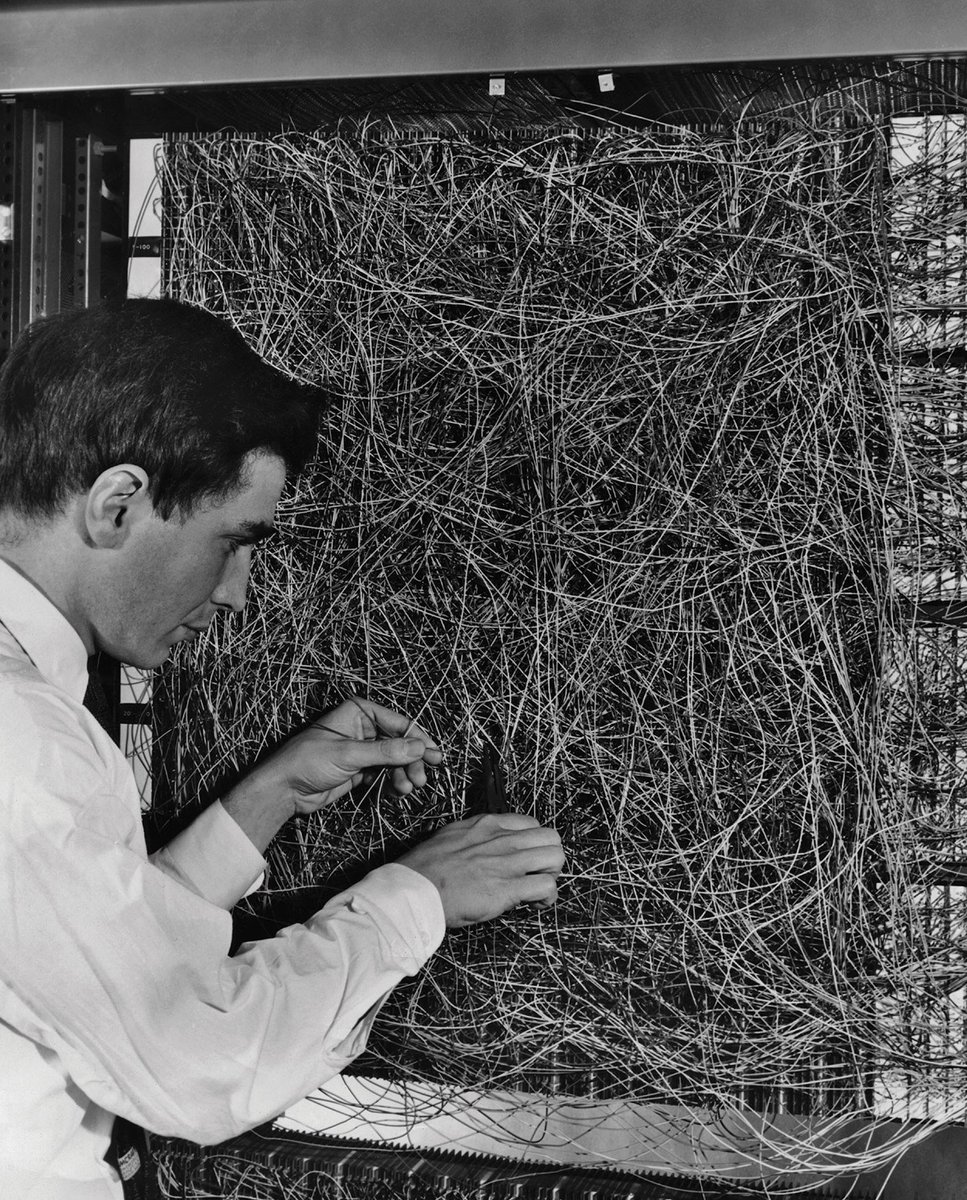

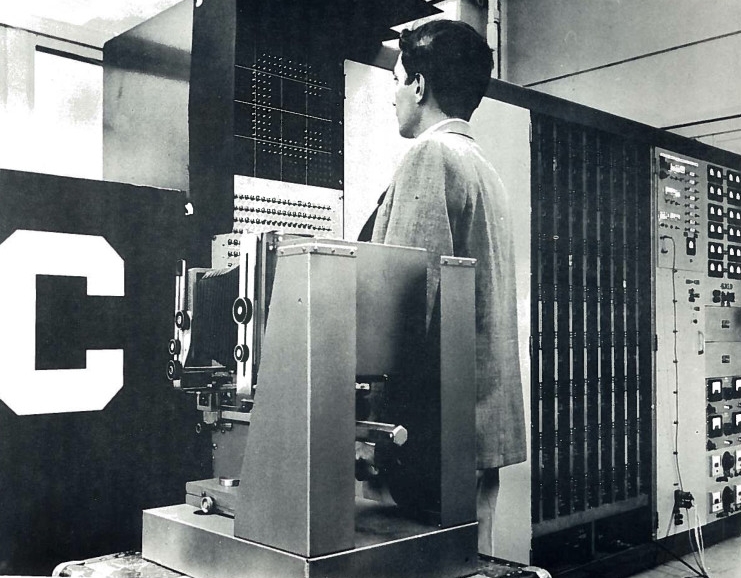

The perceptron model (Rosenblatt, 1957)

\[f(\mathbf{x}) = \begin{cases} 1 &\text{if } \sum_i w_i x_i + b \geq 0 \\ 0 &\text{otherwise} \end{cases}\]

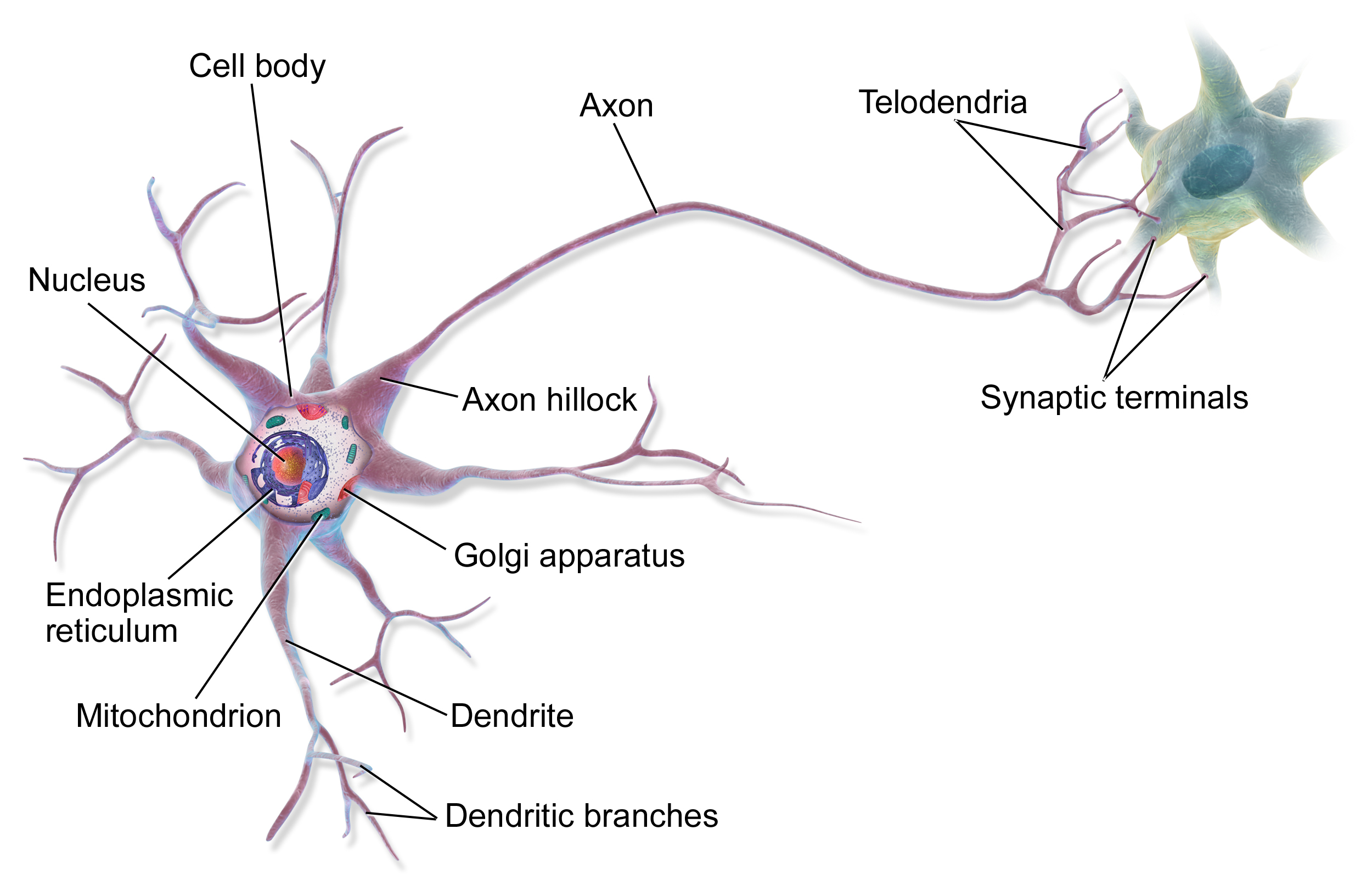

was originally motivated by biology, with \(w_i\) being synaptic weights and \(x_i\) and \(f\) firing rates.

Credits: Frank Rosenblatt, Mark I Perceptron operators’ manual, 1960.

The Mark I Percetron (Frank Rosenblatt).

The Perceptron

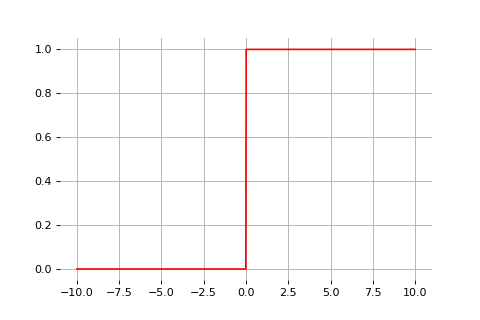

Binary step activation function

Let us define the (non-linear) activation function:

\[\text{sign}(x) = \begin{cases} 1 &\text{if } x \geq 0 \\ 0 &\text{otherwise} \end{cases}\]

The perceptron classification rule can be rewritten as \[f(\mathbf{x}) = \text{sign}(\sum_i w_i x_i + b).\]

Computational graphs

The computation of \[f(\mathbf{x}) = \text{sign}(\sum_i w_i x_i + b)\] can be represented as a computational graph where

- white nodes correspond to inputs and outputs;

- red nodes correspond to model parameters;

- blue nodes correspond to intermediate operations.

Computational graphs

In terms of tensor operations, \(f\) can be rewritten as \[f(\mathbf{x}) = \text{sign}(\mathbf{w}^T \mathbf{x} + b),\] for which the corresponding computational graph of \(f\) is:

2) The sigmoid activation function

The sigmoid function

Extending binary logic with Bayesian probabilities motivates the sigmoid function, \[\sigma(x) = \frac{1}{1 + \exp(-x)}\] which looks like a soft heavyside.

Therefore, an overall model \(f(\mathbf{x};\mathbf{w},b) = \sigma(\mathbf{w}^T \mathbf{x} + b)\) is very similar to the perceptron.

This unit (or variants with other activation functions, see below) is the main primitive of all neural networks!

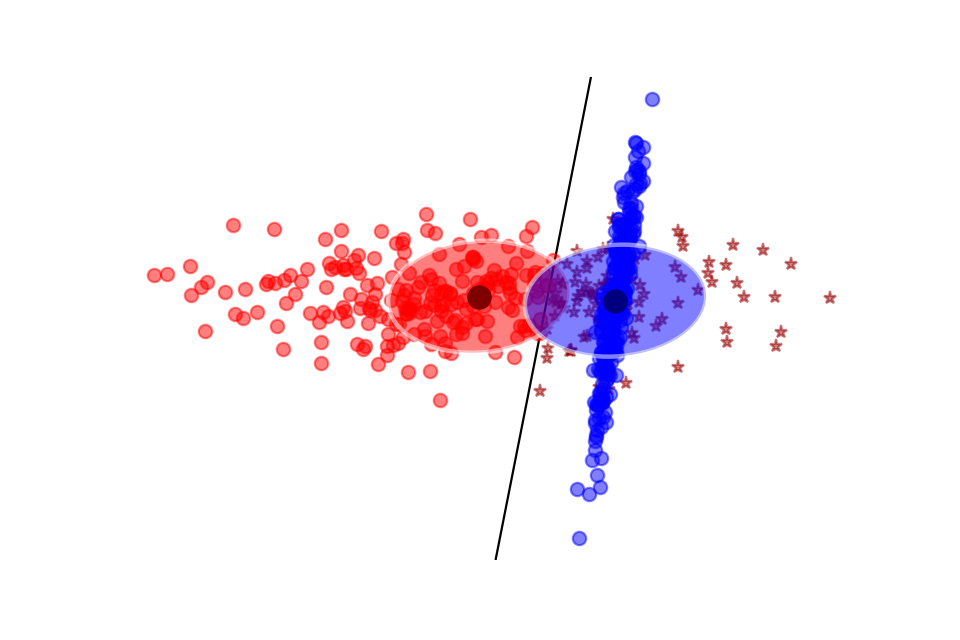

Recap: Logistic regression

(we discussed this already in the classification chapter)

Consider the model \[P(Y=1|\mathbf{x}) = \sigma\left(\mathbf{w}^T \mathbf{x} + b\right)\].

- colored classes correspond to \(Y=1\) and \(Y=0\)

- no model assumptions on class population (Gaussian class populations, homoscedasticity);

- goal: instead, find \(\mathbf{w}, b\) that maximizes the likelihood of the data.

Deep neural networks

Multi-layer perceptron

So far we considered the logistic unit \(h=\sigma\left(\mathbf{w}^T \mathbf{x} + b\right)\), where \(h \in \mathbb{R}\), \(\mathbf{x} \in \mathbb{R}^p\), \(\mathbf{w} \in \mathbb{R}^p\) and \(b \in \mathbb{R}\).

These units can be composed in parallel to form a layer with \(q\) outputs: \[\mathbf{h} = \sigma(\mathbf{W}^T \mathbf{x} + \mathbf{b})\] where \(\mathbf{h} \in \mathbb{R}^q\), \(\mathbf{x} \in \mathbb{R}^p\), \(\mathbf{W} \in \mathbb{R}^{p\times q}\), \(b \in \mathbb{R}^q\) and where \(\sigma(\cdot)\) is upgraded to the element-wise sigmoid function.

Similarly, layers can be composed in series, such that: \[\begin{aligned} \mathbf{h}_0 &= \mathbf{x} \\ \mathbf{h}_1 &= \sigma(\mathbf{W}_1^T \mathbf{h}_0 + \mathbf{b}_1) \\ ... \\ \mathbf{h}_L &= \sigma(\mathbf{W}_L^T \mathbf{h}_{L-1} + \mathbf{b}_L) \\ f(\mathbf{x}; \theta) = \hat{y} &= \mathbf{h}_L \end{aligned}\] where \(\theta\) denotes the model parameters \(\{ \mathbf{W}_k, \mathbf{b}_k, ... | k=1, ..., L\}\).

This model is the multi-layer perceptron, also known as the fully connected feedforward network.

Example: Simplified 2-layer MLP

Let us consider a simplified 2-layer MLP and the following loss function: \[\begin{aligned} f(\mathbf{x}; \mathbf{W}_1, \mathbf{W}_2) &= \sigma\left( \mathbf{W}_2^T \sigma\left( \mathbf{W}_1^T \mathbf{x} \right)\right) \\ \mathcal{\ell}(y, \hat{y}; \mathbf{W}_1, \mathbf{W}_2) &= \text{cross_ent}(y, \hat{y}) + \lambda \left( ||\mathbf{W}_1||_2 + ||\mathbf{W}_2||_2 \right) \end{aligned}\] for \(\mathbf{x} \in \mathbb{R^p}\), \(y \in \mathbb{R}\), \(\mathbf{W}_1 \in \mathbb{R}^{p \times q}\) and \(\mathbf{W}_2 \in \mathbb{R}^q\).

Here the term proportional to \(\lambda\) is some regularization that is sometimes introduced to avoid overfitting of the network weights (similar to what we have seen in linear regression earlier).

In the forward pass, intermediate values are all computed from inputs to outputs, which results in the annotated computational graph below:

The total derivative can be computed through a backward pass, by walking through all paths from outputs to parameters in the computational graph and accumulating the terms. For example, for \(\frac{\text{d} \ell}{\text{d} \mathbf{W}_1}\) we have: \[\begin{aligned} \frac{\text{d} \ell}{\text{d} \mathbf{W}_1} &= \frac{\partial \ell}{\partial u_8}\frac{\text{d} u_8}{\text{d} \mathbf{W}_1} + \frac{\partial \ell}{\partial u_4}\frac{\text{d} u_4}{\text{d} \mathbf{W}_1} \\ \frac{\text{d} u_8}{\text{d} \mathbf{W}_1} &= ... \end{aligned}\]

Let us zoom in on the computation of the network output \(\hat{y}\) and of its derivative with respect to \(\mathbf{W}_1\).

- Forward pass: values \(u_1\), \(u_2\), \(u_3\) and \(\hat{y}\) are computed by traversing the graph from inputs to outputs given \(\mathbf{x}\), \(\mathbf{W}_1\) and \(\mathbf{W}_2\).

- Backward pass: by the chain rule we have \[\begin{aligned} \frac{\text{d} \hat{y}}{\text{d} \mathbf{W}_1} &= \frac{\partial \hat{y}}{\partial u_3} \frac{\partial u_3}{\partial u_2} \frac{\partial u_2}{\partial u_1} \frac{\partial u_1}{\partial \mathbf{W}_1} \\ &= \frac{\partial \sigma(u_3)}{\partial u_3} \frac{\partial \mathbf{W}_2^T u_2}{\partial u_2} \frac{\partial \sigma(u_1)}{\partial u_1} \frac{\partial \mathbf{W}_1^T \mathbf{x}}{\partial \mathbf{W}_1} \end{aligned}\] Note how evaluating the partial derivatives requires the intermediate values computed forward.

- This algorithm is also known as backpropagation.

- An equivalent procedure can be defined to evaluate the derivatives in forward mode, from inputs to outputs.

- Since differentiation is a linear operator, automatic differentiation can be implemented efficiently in terms of tensor operations.

The vanishing gradients problem

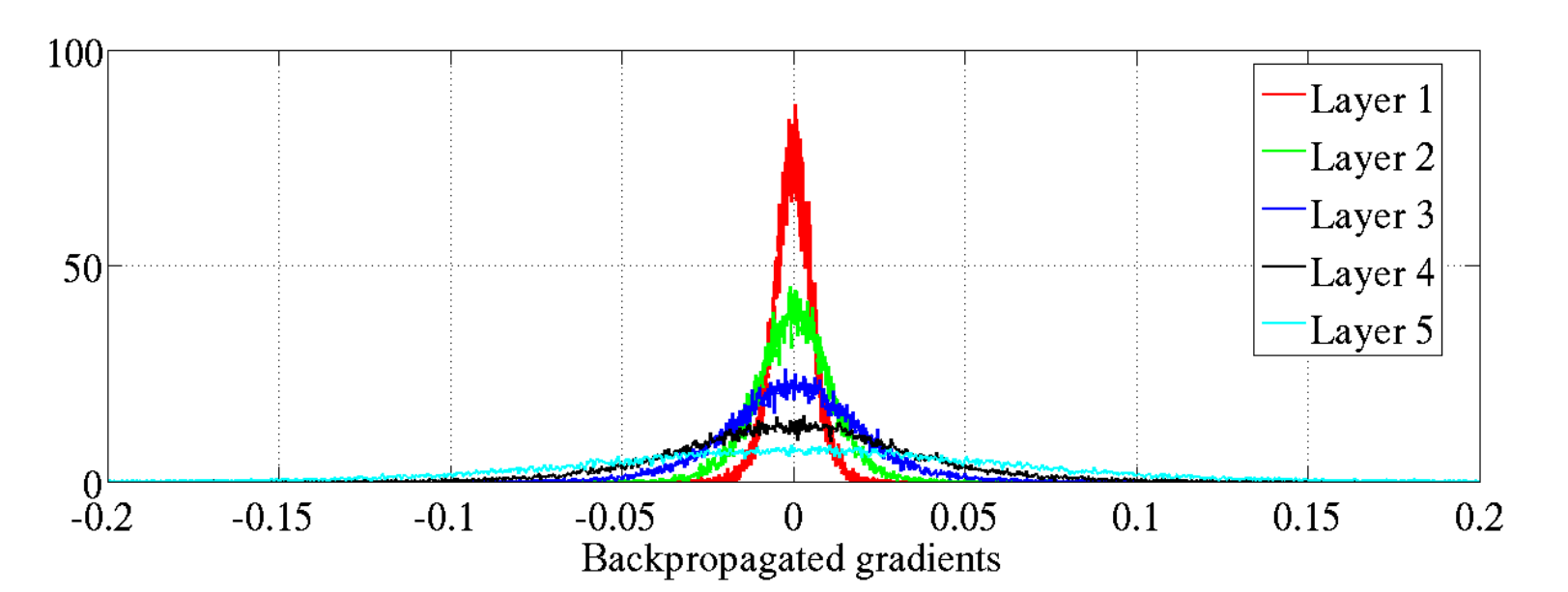

Training deep MLPs with many layers has for long (pre-2011) been very difficult due to the vanishing gradient problem.

- Small gradients slow down, and eventually block, stochastic gradient descent.

- This results in a limited capacity of learning.

Backpropagated gradients normalized histograms (Glorot and Bengio, 2010).

Gradients for layers far from the output vanish to zero.

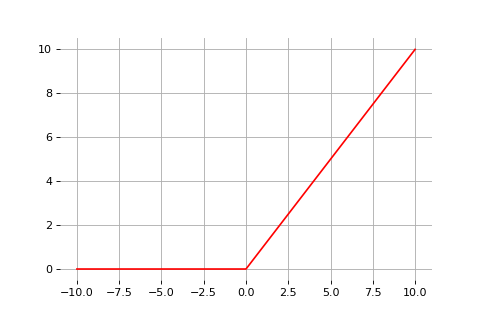

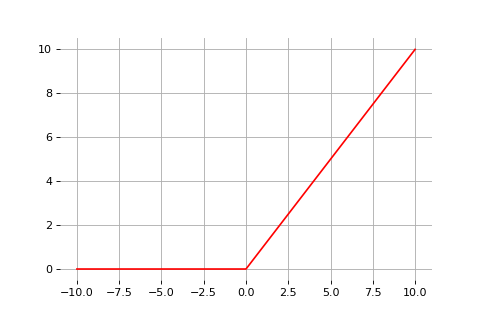

3) The ReLU activation function

Rectified linear units

Instead of the sigmoid activation function, modern neural networks are mostly based on rectified linear units (ReLU) (Glorot et al, 2011):

\[\text{ReLU}(x) = \max(0, x)\]

Note that the derivative of the ReLU function is

\[\frac{\text{d}}{\text{d}x} \text{ReLU}(x) = \begin{cases}

0 &\text{if } x \leq 0 \\

1 &\text{otherwise}

\end{cases}\]

For \(x=0\), the derivative is undefined. In practice, it is set to zero.

Therefore,

\[\frac{\text{d}\hat{y}}{\text{d}w_1} = \underbrace{\frac{\partial \sigma(u_5)}{\partial u_5}}_{= 1} w_3 \underbrace{\frac{\partial \sigma(u_3)}{\partial u_3}}_{= 1} w_2 \underbrace{\frac{\partial \sigma(u_1)}{\partial u_1}}_{= 1} x\]

This solves the vanishing gradient problem, even for deep networks! (provided proper initialization)

Note that:

- The ReLU unit dies when its input is negative, which might block gradient descent.

- This is actually a useful property to induce sparsity.

- This issue can also be solved using leaky ReLUs, defined as \[\text{LeakyReLU}(x) = \max(\alpha x, x)\] for a small \(\alpha \in \mathbb{R}^+\) (e.g., \(\alpha=0.1\)).

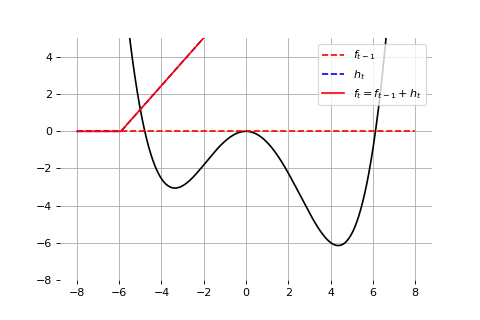

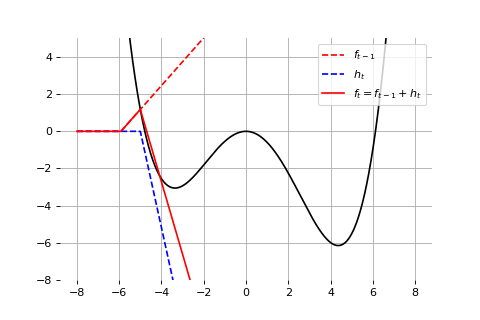

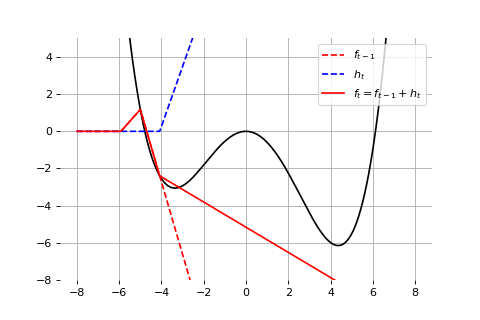

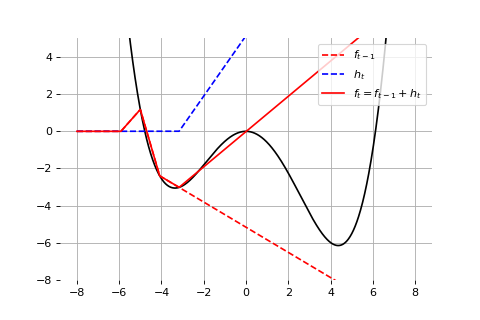

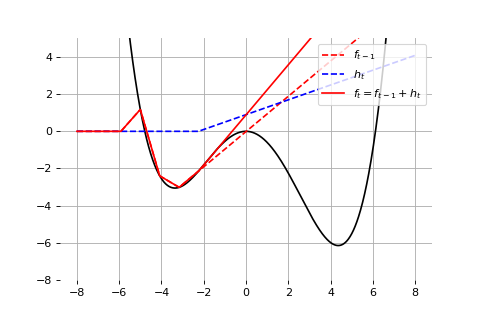

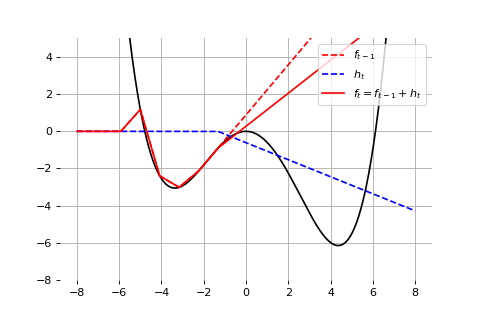

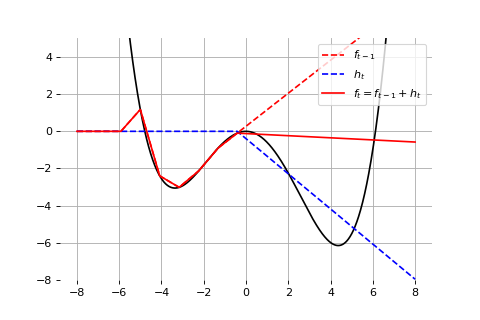

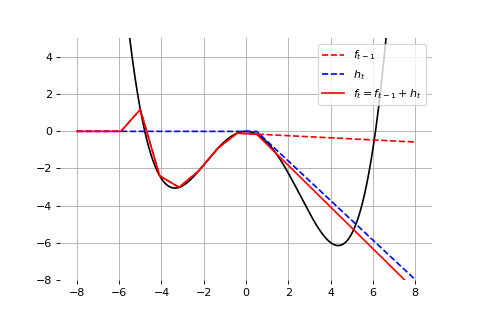

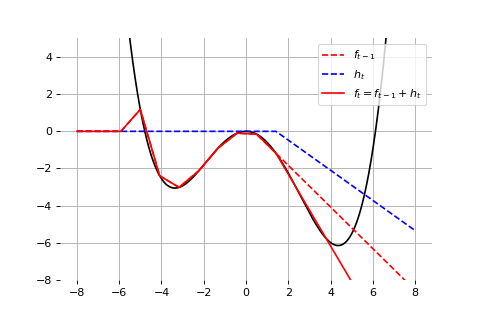

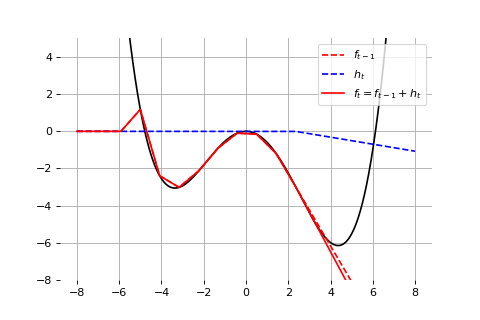

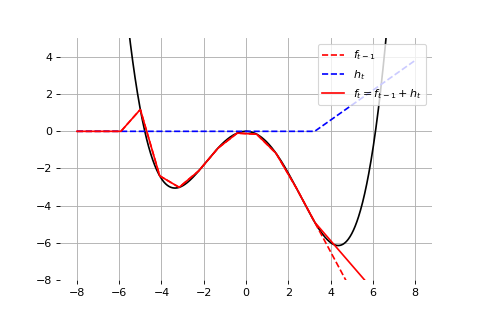

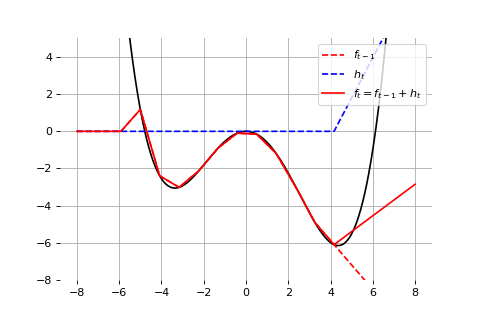

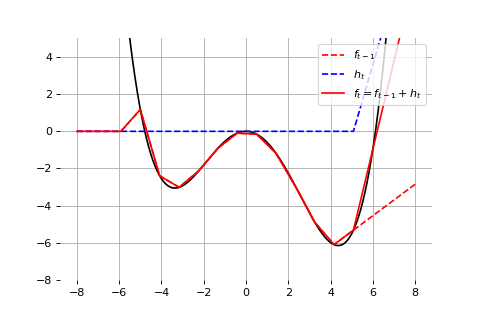

Example: Universal approximation

Exercises

shorturl.at/yBW16